Last modified: 2025-03-16 22:22:12

< 2025-03-15 2025-03-17 >

So I think the plan is to switch to using an intermediate arithmetic tree representation in between the document tree and the GLSL. And then we can:

So that will want to be the "CAD kernel" for Isoform, which can be ripped out and made into a separate "project", provisionally called "Peptide".

And this guides the kind of work I should be doing on Isoform in the immediate term: UI improvements are totally irrelevant, I only need to be working on things that will affect the kinds of SDFs that we need.

So I should add more node types:

Primitives:

Modifiers:

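In particular I think we can't have conditionals in the SDFs, because you can't do interval arithmetic on them directly. When the condition isn't decided over the whole interval, you'd have to evaluate both branches and then take the minimum of their lower bounds and the maximum of their upper bounds.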
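A rough sketch of what "evaluate both sides and take the min and max" could look like, in Python rather than GLSL just so the logic can be checked on the CPU. The names `hull` and `select_if_negative` are made up for illustration and intervals are plain `(lower, upper)` tuples.

```python
from typing import Tuple

Interval = Tuple[float, float]  # (lower bound, upper bound)


def hull(a: Interval, b: Interval) -> Interval:
    """Smallest interval that contains both a and b."""
    return (min(a[0], b[0]), max(a[1], b[1]))


def select_if_negative(cond: Interval, if_neg: Interval, if_nonneg: Interval) -> Interval:
    """Interval version of `cond < 0 ? if_neg : if_nonneg`."""
    if cond[1] < 0.0:
        # The condition is true everywhere in the interval.
        return if_neg
    if cond[0] >= 0.0:
        # The condition is false everywhere in the interval.
        return if_nonneg
    # Ambiguous: either branch could be taken, so the result must cover both.
    return hull(if_neg, if_nonneg)
```

The ambiguous case is the expensive one: both branches are evaluated and the result interval gets wider, which is the reason to avoid conditionals in the first place.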

I'm also keen to find out if there's a good way to do "tape shortening" in GLSL. We could have each min/max node set a flag to say if it should go one way or the other, and as long as we are continually iterating within the same interval we let the flags persist, and if we need to evaluate a different interval we reset all the flags? Can you do better than resetting all the flags? Remember what they were from the previous run? You only need to stash 1 extra set of flags, because you only ever "backtrack" 1 level (either you take the first half of the interval or the second).

And I still really want to do the thing where you can use some modifier function to deform the domain of a LinearPattern or whatever, without deforming the actual object.

Or, for example, combine a distance deformation with a lattice, so that we only roughen it in a gyroid pattern. So we'd be using the lattice (or any other object) to mask the deformation.
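One possible reading of the masking idea, sketched in Python. The gyroid expression is the usual trigonometric approximation (an implicit function, not a true distance). The `roughness` function and the hard 0/1 mask are assumptions made up for this example; a hard mask makes the field discontinuous at the mask boundary, so a real version would probably want a smooth falloff.

```python
import math


def gyroid(x, y, z):
    """Common trigonometric approximation of the gyroid surface."""
    return (math.sin(x) * math.cos(y)
            + math.sin(y) * math.cos(z)
            + math.sin(z) * math.cos(x))


def sphere(x, y, z, r=1.0):
    return math.sqrt(x * x + y * y + z * z) - r


def roughness(x, y, z):
    """Hypothetical stand-in for a distance deformation."""
    return 0.05 * math.sin(20 * x) * math.sin(20 * y) * math.sin(20 * z)


def masked_roughen(x, y, z):
    """Roughen the sphere only where the gyroid field is negative."""
    mask = 1.0 if gyroid(x, y, z) < 0.0 else 0.0
    return sphere(x, y, z) + mask * roughness(x, y, z)
```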

But I can start with the primitives/modifiers above and get SDFs for them so that I have something to work from when making Peptide.

Lattices: https://en.wikipedia.org/wiki/Triply_periodic_minimal_surface

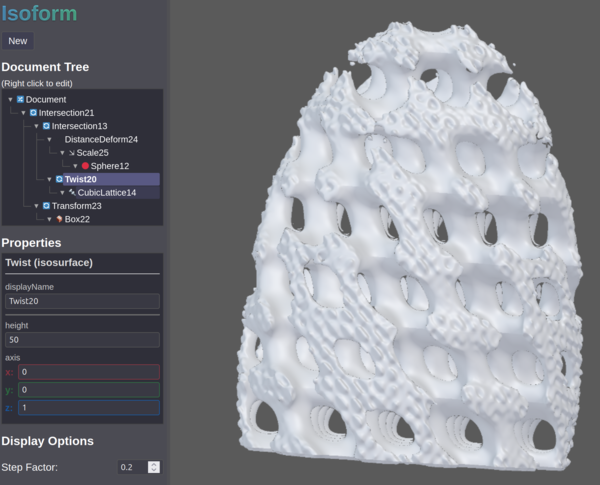

I've been messing around in Isoform a bit and made this shape:

The rendering is a bit bad because I had to reduce the step factor to cope with the chamfer on the bottom.

I kind of want to 3D print this object, just to hold it in my hands, but I still don't have support for meshing.

Meh, I guess the quickest way is to do "Peptide" first and then use it to implement marching cubes.

How would you do a helical extrude? You need to calculate the distance to the closest point on the extrusion, which I don't really see how you could do?

There is a project called "RayTK" that has https://t3kt.github.io/raytk/reference/operators/sdf/helixSdf

There are lots of other potentially useful SDFs in that project as well.

That takes an input for a "cross section shape". It also lets you specify some of the parameters as "fields" instead of constants. Is this something I should be doing?

Internally, we could have "field" nodes which just return some value, and then we can have "add field" and "multiply field", for both domain and distance. "Add field" takes a vector field, "Multiply field" takes a scalar field.

So translation would add a constant vector to the domain.

Uniform scaling will multiply the domain by a constant scalar.
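A small Python sketch of how field nodes might compose, to check that translation and uniform scaling fall out of "add field" and "multiply field". All the function names here are hypothetical. Two assumptions worth flagging: moving an object by `t` means adding `-t` to the domain, and a uniform scale by `s` multiplies the domain by `1/s` and then multiplies the distance by `s` to keep it a true distance.

```python
import math


def sphere(p):
    """Unit sphere at the origin."""
    return math.sqrt(sum(c * c for c in p)) - 1.0


def constant_field(value):
    """A field node that returns the same value everywhere."""
    return lambda p: value


def add_domain(sdf, vector_field):
    """'Add field' on the domain: offset the sample point by a vector field."""
    def out(p):
        o = vector_field(p)
        return sdf((p[0] + o[0], p[1] + o[1], p[2] + o[2]))
    return out


def multiply_domain(sdf, scalar_field):
    """'Multiply field' on the domain: scale the sample point by a scalar field."""
    def out(p):
        s = scalar_field(p)
        return sdf((p[0] * s, p[1] * s, p[2] * s))
    return out


def multiply_distance(sdf, scalar_field):
    """'Multiply field' on the distance."""
    return lambda p: sdf(p) * scalar_field(p)


def translate(sdf, t):
    return add_domain(sdf, constant_field((-t[0], -t[1], -t[2])))


def uniform_scale(sdf, s):
    shrunk_domain = multiply_domain(sdf, constant_field(1.0 / s))
    return multiply_distance(shrunk_domain, constant_field(s))
```

Swapping `constant_field` for something that varies with `p` gives the "parameters as fields" behaviour, at the cost of the result no longer being an exact distance.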

One massive advantage of the binary search renderer is that we still render the 0 surface perfectly even if the distance field is totally wrong. (But we still care about the distance field for other reasons).

The picture from the other day:

Showing a thickness of a chamfered PolarPattern of boxes.

If I add a "renormalise" function that divides the distance by the magnitude of the local gradient (or... multiplies by?), will that fix this?

No, it doesn't work. It has some discontinuities, which then makes the Thickness operation very badly discontinuous.
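For reference, the renormalisation can be sketched like this in Python. It divides (rather than multiplies): to first order the distance to the surface is f / |grad f|, so a field that is too steep by a factor of 3 gets divided by 3. The central-difference gradient and the `stretched_sphere` test field are assumptions for the example, not what Isoform does.

```python
import math


def gradient(f, p, h=1e-5):
    """Central-difference gradient of a scalar field f at point p."""
    x, y, z = p
    return ((f((x + h, y, z)) - f((x - h, y, z))) / (2 * h),
            (f((x, y + h, z)) - f((x, y - h, z))) / (2 * h),
            (f((x, y, z + h)) - f((x, y, z - h))) / (2 * h))


def renormalise(f, p):
    """First-order distance estimate: field value over gradient magnitude."""
    g = gradient(f, p)
    magnitude = math.sqrt(g[0] ** 2 + g[1] ** 2 + g[2] ** 2)
    return f(p) / magnitude if magnitude > 0.0 else f(p)


def stretched_sphere(p):
    """Unit sphere whose field is 3x too steep, so it overestimates distance."""
    return 3.0 * (math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - 1.0)
```

Wherever the gradient jumps (creases, medial axes, the seams of a pattern) the divisor jumps too, which is consistent with the discontinuities seen above.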

I'm going to see if I can make it do raymarching again a couple of times after the first hit, with the sign of the function inverted each time, and blend the results together.

Works really well!

That's with 3 extra rays per pixel. First one to the first surface as normal, then 1 to the back surface, then another front surface, then another back surface.
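A CPU-side Python sketch of the repeated march with the sign flipped after each hit. The scene (two unit spheres along the ray), the nudge of `2 * eps` past each surface, and the front-to-back `blend` are all assumptions for illustration; the actual blending in the shader may well be different.

```python
import math


def two_spheres(p):
    """Union of unit spheres centred at z = 0 and z = 4 (test scene)."""
    x, y, z = p
    return min(math.sqrt(x * x + y * y + z * z) - 1.0,
               math.sqrt(x * x + y * y + (z - 4.0) ** 2) - 1.0)


def march_hits(sdf, origin, direction, max_hits=4, eps=1e-4,
               max_steps=256, t_max=20.0):
    """Sphere-trace along a ray, inverting the SDF's sign after every hit."""
    hits, t, sign = [], 0.0, 1.0
    for _ in range(max_steps):
        if len(hits) == max_hits or t > t_max:
            break
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sign * sdf(p)
        if dist < eps:
            hits.append(t)      # front surface when sign > 0, back when sign < 0
            sign = -sign        # march through the other side next
            t += 2.0 * eps      # nudge past the surface we just hit
        else:
            t += dist
    return hits


def blend(shades, opacity):
    """Front-to-back compositing of per-hit shades with a single opacity."""
    result, transmitted = 0.0, 1.0
    for shade in shades:
        result += transmitted * opacity * shade
        transmitted *= 1.0 - opacity
    return result
```

With a ray starting at z = -3 and pointing along +z, `march_hits` should find the front and back of each sphere in turn, which corresponds to the 1 + 3 rays described above.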

You can just about see that the torus continues as a solid on the inside of the thicknessed prism!

And I've added a slider to the UI to control opacity. Great success.

Controlling opacity is really useful with complicated objects so that you can see through things that are blocking your view.

It would probably be useful to be able to control opacity of document subtrees. More generally, we could have each node return not only a distance to the surface, but also a "material" (which we can blend with smooth union etc.), and then the eventual rendering (colour, opacity) will be chosen according to the material that the SDF gave us.
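A minimal Python sketch of a node returning a distance plus a material, using the widely used polynomial smooth minimum so that the same blend factor mixes the materials. Representing a material as an `(r, g, b, opacity)` tuple is an assumption for the example.

```python
def smooth_union(d1, m1, d2, m2, k):
    """Polynomial smooth minimum of two distances, blending materials too.

    d1, d2: distances; m1, m2: materials as (r, g, b, opacity) tuples;
    k: blend radius. Returns (distance, material).
    """
    h = max(0.0, min(1.0, 0.5 + 0.5 * (d2 - d1) / k))
    distance = d2 * (1.0 - h) + d1 * h - k * h * (1.0 - h)
    material = tuple(b * (1.0 - h) + a * h for a, b in zip(m1, m2))
    return distance, material
```

Far from the blend region `h` saturates at 0 or 1, so the result is just the nearer object's distance and material; inside it the material is a weighted mix.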

This does kind of kill the shader compilation time even more though.

Using Matt Keeter's idea explained in https://www.youtube.com/watch?v=UxGxsGnbyJ4&t=85s

OK, some more thought on this. We have the shader generator write a struct like:

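    // GLSL does not allow default values on struct members, so the flags
    // are set when the global is constructed.
    struct TapeShorteningFlags {
        bool min1_left;
        bool min1_right;
        bool min2_left;
        bool min2_right;
    };

    TapeShorteningFlags activeFlags = TapeShorteningFlags(true, true, true, true);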

Which say whether we need to evaluate the left node or the right node for each min/max operation. They all start off set to true (or false if that's easier).

When we evaluate a min/max operation, we first look at its current flags. If it says we can skip one of the operands then we just return the value of the other operand.

Otherwise we evaluate both, and then decide whether we could have skipped one of the operands. If the result intervals are [0.1, 0.25] and [10.0, 25.0], then we only needed to evaluate the first operand to get the correct min result. So we set activeFlags.min1_right = false so that next time we don't evaluate it.

And then in the binary search loop, before we evaluate the SDF over the interval, we back up the existing activeFlags, then evaluate the SDF, and if we hit the surface in the second half then we restore the backed up activeFlags and carry on (otherwise we don't need to restore it because the next evaluation will be a strict subset of this one).

And then... great success, tape shortening in a fragment shader!
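To check the backup/restore logic on the CPU, here is a Python sketch with a 1D stand-in for the SDF along a ray: the min of two "spheres" of radius 1 centred at t = 3 and t = 10. The helper names, the dict of flags, and the evaluation counters are all made up for this example.

```python
def iabs(a):
    """Interval absolute value."""
    lo, hi = a
    if lo >= 0.0:
        return (lo, hi)
    if hi <= 0.0:
        return (-hi, -lo)
    return (0.0, max(-lo, hi))


def ishift(a, c):
    """Add a constant to an interval."""
    return (a[0] + c, a[1] + c)


def scene(t, flags, counts):
    """Interval min of two 1D spheres; updates flags when one side dominates."""
    f1 = f2 = None
    if flags["left"]:
        counts["left"] += 1
        f1 = ishift(iabs(ishift(t, -3.0)), -1.0)
    if flags["right"]:
        counts["right"] += 1
        f2 = ishift(iabs(ishift(t, -10.0)), -1.0)
    if not flags["right"]:
        return f1
    if not flags["left"]:
        return f2
    if f1[1] < f2[0]:
        flags["right"] = False   # left is strictly smaller on this interval
        return f1
    if f2[1] < f1[0]:
        flags["left"] = False    # right is strictly smaller on this interval
        return f2
    return (min(f1[0], f2[0]), min(f1[1], f2[1]))


def first_hit(t_lo, t_hi, iterations=30):
    """Binary search for the first surface crossing in [t_lo, t_hi]."""
    flags = {"left": True, "right": True}
    counts = {"left": 0, "right": 0}
    for _ in range(iterations):
        mid = 0.5 * (t_lo + t_hi)
        backup = dict(flags)                 # the one stashed set of flags
        lo, _hi = scene((t_lo, mid), flags, counts)
        if lo <= 0.0:
            t_hi = mid                       # subset of what we just evaluated
        else:
            flags = backup                   # second half: undo the shortening
            t_lo = mid
    return t_lo, counts
```

Searching `[0, 16]` should converge on the first crossing at t = 2, with the right-hand sphere only evaluated for the first couple of intervals before its flag is cleared.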

I don't think the branching in min/max operations will be a problem because a.) it mostly won't cause warp divergence, and b.) the branch will be very short so it's not too costly to execute both branches anyway.

Oh. But if we are turning all the arithmetic into straight-line code, then at the point of reaching the "min" instruction, we've already evaluated its arguments. We could make all the inputs to "min" conditional, but that then inhibits turning the tree into a DAG, because we might want the arguments somewhere else. We could test all the min/max flags that require a certain assignment? Seems expensive.

We could make min and max into "synchronisation points" or something, so every operand of min/max has to be its own DAG, with no references to elsewhere in the tree. And then we test the flag before evaluating all the code for each argument and we know that it won't then skip out anything that is useful elsewhere, at the cost of potentially duplicating some operations.

I think the "synchronisation points" (what's a better word?) idea is best. I don't want the shader code to get super complicated. A conditional to decide whether to run the straight-line code for each argument of each min/max is fine.

So the evaluation function might look something like:

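    // Pseudocode: "-" on iafloat/iavec3 and the bare 1.0 literals stand in
    // for interval operations, since GLSL has no operator overloading.
    iafloat sdf(iavec3 p) {
        // "Registers", declared up front.
        iafloat f0, f1, f2, f3;
        iavec3 v0, v1, v2;

        // Left operand of min1: unit sphere centred at (1, 0, 0).
        if (activeFlags.min1_left) {
            v0 = p;
            v1 = iavec3_new(1.0, 0.0, 0.0);
            v0 = v0 - v1;
            f1 = ilength(v0);
            f2 = 1.0;
            f1 = f1 - f2;
        }

        // Right operand of min1: unit sphere centred at (0, 1, 0).
        if (activeFlags.min1_right) {
            v1 = p;
            v2 = iavec3_new(0.0, 1.0, 0.0);
            v1 = v1 - v2;
            f2 = ilength(v1);
            f3 = 1.0;
            f2 = f2 - f3;
        }

        if (!activeFlags.min1_right) f0 = f1;
        else if (!activeFlags.min1_left) f0 = f2;
        else {
            if (f1.y < f2.x) {
                // Left is strictly smaller: skip the right operand next time.
                activeFlags.min1_right = false;
                f0 = f1;
            } else if (f2.y < f1.x) {
                // Right is strictly smaller: skip the left operand next time.
                activeFlags.min1_left = false;
                f0 = f2;
            } else {
                // Intervals overlap: ordinary interval min, flags unchanged.
                f0.x = min(f1.x, f2.x);
                f0.y = min(f1.y, f2.y);
            }
        }

        return f0;
    }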

For the union of 2 unit spheres.

So we're declaring our "registers" of iafloat and iavec3 upfront. We're referring to the global activeFlags to decide which arguments to min we need to evaluate, and then we're updating the flags to mask off one of the arguments if we found that the other argument is strictly smaller.

If we're going to be doing interval arithmetic, is it worth also doing automatic differentiation to work out the normals?

Currently we work out the normals with central differences, which is 6 additional SDF evaluations per ray hit. We could use "forward differences" which is only 3 additional evaluations. If you assume you want maybe 20 iterations of binary search, then forward differences cost 3 extra evaluations on top of 20, so automatic differentiation would have to add less than ~15% overhead to be worth it.
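For comparison, a Python sketch of forward differences next to forward-mode automatic differentiation with dual numbers, on a unit sphere. The `Dual` class only implements the handful of operations this one SDF needs and is purely illustrative.

```python
import math


class Dual:
    """A value together with its gradient (forward-mode autodiff)."""

    def __init__(self, value, grad):
        self.value, self.grad = value, grad

    def __add__(self, other):
        return Dual(self.value + other.value,
                    tuple(a + b for a, b in zip(self.grad, other.grad)))

    def __mul__(self, other):
        # Product rule: d(uv) = u dv + v du
        return Dual(self.value * other.value,
                    tuple(self.value * b + other.value * a
                          for a, b in zip(self.grad, other.grad)))

    def sqrt(self):
        root = math.sqrt(self.value)
        return Dual(root, tuple(a / (2.0 * root) for a in self.grad))

    def minus_constant(self, c):
        return Dual(self.value - c, self.grad)


def sphere(p, r=1.0):
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - r


def normal_forward_difference(p, h=1e-6):
    """3 extra evaluations; f0 is assumed to be known from the ray hit."""
    f0 = sphere(p)
    x, y, z = p
    return ((sphere((x + h, y, z)) - f0) / h,
            (sphere((x, y + h, z)) - f0) / h,
            (sphere((x, y, z + h)) - f0) / h)


def normal_autodiff(p, r=1.0):
    """One evaluation that carries the gradient along with the value."""
    x = Dual(p[0], (1.0, 0.0, 0.0))
    y = Dual(p[1], (0.0, 1.0, 0.0))
    z = Dual(p[2], (0.0, 0.0, 1.0))
    return (x * x + y * y + z * z).sqrt().minus_constant(r).grad
```

The autodiff version does one pass but every operation carries three extra components, which is where the overhead comes from.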

We could provide 2 implementations of the SDF: one with interval arithmetic and no normals, and one with ordinary arithmetic and automatic differentiation, and on the last iteration of the loop we switch to the version that gives us normals. That way we don't waste time computing normals where we don't need them, and we don't waste time doing interval arithmetic where we don't need it.
