Last modified: 2025-03-18 16:05:21

Currently a lot of the "Modifier" nodes compute rotation matrices in the shader, even though they're static. I think we should precompute those in JavaScript.

It's possible that the shader compilation step is doing this for me? Unsure, will see whether it is any more performant afterwards.

Loading up the page on my laptop with the default object, it ends up at resolution scale 0.931.

But actually, this doesn't use any of Twist, LinearPattern, PolarPattern, which I think are the only ones that compute rotateToAxis() in the shader.

With all 3 of those, it took 42 seconds (!) to compile the shader. And resolution scale settles at 0.608.

So now let's compute the matrices in JavaScript and pass them in.
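A minimal sketch of what the JavaScript side could look like. I am assuming here that rotateToAxis() builds the rotation that takes the +Z axis onto a given axis; the real function may use a different convention, and `rotateToAxisMatrix` is a made-up name. The matrix is returned column-major so it can be passed straight to a `mat3` uniform.

```javascript
// Hypothetical JavaScript counterpart of the shader's rotateToAxis().
// Assumption: it should map the +Z axis onto `axis`. Returns a column-major
// 3x3 matrix, which is the layout a GLSL mat3 uniform expects.
function rotateToAxisMatrix(axis) {
  const len = Math.hypot(axis[0], axis[1], axis[2]);
  const x = axis[0] / len;
  const y = axis[1] / len;
  const z = axis[2] / len;

  // Axis points (almost) exactly along -Z: rotate 180 degrees about X.
  if (z < -0.999999) {
    return new Float32Array([1, 0, 0, 0, -1, 0, 0, 0, -1]);
  }

  // Rodrigues' formula for the rotation taking (0,0,1) to (x,y,z):
  // R = I + K + K^2 / (1 + z), where K is the cross-product matrix of
  // (0,0,1) x (x,y,z) = (-y, x, 0).
  const k = 1 / (1 + z);
  return new Float32Array([
    1 - x * x * k, -x * y * k, -x, // first column
    -x * y * k, 1 - y * y * k, -y, // second column
    x, y, z, // third column: the image of +Z
  ]);
}
```

The result could then be uploaded once per shader rebuild with `gl.uniformMatrix3fv(location, false, rotateToAxisMatrix(axis))`, where `location` is whatever uniform the node declares.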

It actually seems to have hardly helped at all? I wonder if the shader compiler has really effective constant folding?

Also, I may be able to define my "utility" functions in one shader file that I compile once, and then not recompile all the same stuff every time when recompiling the user shader?

How can we do shader profiling? I am interested in profiling both compile time and runtime.

The next idea is to make union etc. check the bounding spheres and skip computations that can't affect the result?

Well I tried that but it also made performance worse??

I have reduced some branching in the shaders, doesn't seem to have helped much though.

I was curious if generating the shader code was slow, but it is like 0 ms to generate the shader code, almost all of the time is spent in compiling it.

I have found an improvement by breaking the ray marching loop sooner when we've travelled a long way (10x the camera distance from origin) and the distance to the object is increasing (i.e. locally moving away from it), as a heuristic that we've gone past it.
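Roughly what that early exit looks like, written as a JavaScript sketch rather than the actual GLSL. The function name, the step limit and the hit threshold are placeholders, not the values in the real shader.

```javascript
// Sketch of sphere tracing with the "we've gone past it" heuristic.
// `sdf` takes a point [x, y, z] and returns the signed distance.
function rayMarch(sdf, origin, dir, maxSteps = 100, eps = 0.001) {
  const cameraDistance = Math.hypot(origin[0], origin[1], origin[2]);
  let t = 0;
  let previousD = Infinity;
  for (let i = 0; i < maxSteps; i++) {
    const p = [origin[0] + dir[0] * t, origin[1] + dir[1] * t, origin[2] + dir[2] * t];
    const d = sdf(p);
    if (d < eps) return { hit: true, t, steps: i + 1 };
    // Heuristic: a long way out (10x the camera distance from the origin)
    // and the distance is growing, so assume we have passed the object.
    if (t > 10 * cameraDistance && d > previousD) return { hit: false, t, steps: i + 1 };
    previousD = d;
    t += d;
  }
  return { hit: false, t, steps: maxSteps };
}

// Unit sphere at the origin, used only to exercise the loop.
function unitSphere(p) {
  return Math.hypot(p[0], p[1], p[2]) - 1;
}
```

With `unitSphere`, a ray from `[0, 0, -5]` along +Z hits at `t = 4`, and a ray from `[3, 0, -5]` that misses exits well before the step limit instead of burning all 100 steps.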

For some reason the SketchNode shader is incredibly expensive to compile?

The default object takes about 1250 ms for me, which goes down to 405 ms if I delete the sketch. So why is that slow?

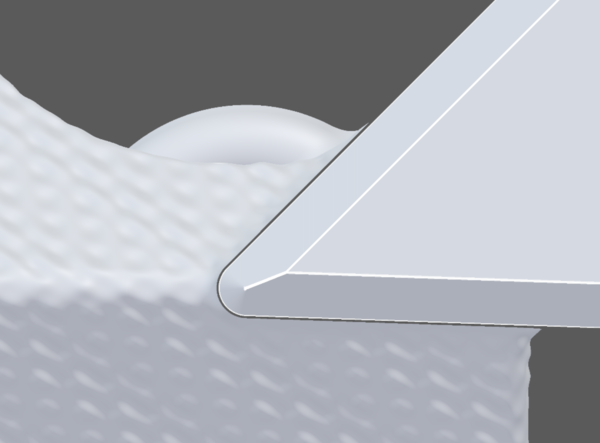

I have artifacts like this:

Where the ray parallel to the triangle surface, and close to it, only gets to march along in tiny steps, and gives up before reaching the surface of the cube.

Gyroid is pretty cool and easy to do https://www.youtube.com/watch?v=gGwjJUD-OsI

I watched the Matt Keeter talk https://www.youtube.com/watch?v=UxGxsGnbyJ4 last night. I really like the idea of having the nodes return some intermediate tree representation of their function, and then optimising that and turning it into GLSL code. That would also give a convenient way to evaluate the nodes in JavaScript without needing them to provide 2 separate implementations. And it would give more opportunity to generate optimised GLSL.

If we pre-rotate the scene so that the camera is always pointing along the Z axis (we may already have this condition?), then we could potentially use the interval arithmetic idea to binary search the "ray" to work out where the surface is, instead of naive ray marching. That would tell us if we shoot off to "infinity" (defined as 1000 let's say) in a single evaluation. And if the ray with Z from 0 to 1000 crosses the boundary, then calculate the ray from 0 to 500 and see if that one crosses the boundary. If it does, calculate the ray from 0 to 250 next; if not, the surface must be in the far half, so calculate 500 to 750. And so on, until you are within 0.001 of the boundary, which will be 20 steps (log base 2 of 1000/0.001 is ~20).
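A sketch of that search in JavaScript, for a single sphere and a ray travelling along +Z. All the names are made up for illustration. The sphere's interval bounds happen to be tight; for a general SDF the bounds are only conservative, so a "may contain the surface" answer is weaker than it is here.

```javascript
// Square of an interval [lo, hi], handling intervals that straddle zero.
function squareInterval([lo, hi]) {
  if (lo >= 0) return [lo * lo, hi * hi];
  if (hi <= 0) return [hi * hi, lo * lo];
  return [0, Math.max(lo * lo, hi * hi)];
}

// Interval SDF of a sphere centred at (0, 0, cz), evaluated over a whole
// segment [z0, z1] of the ray through (x, y) that travels along +Z.
function sphereAlongRay(x, y, cz, radius) {
  return ([z0, z1]) => {
    const [a, b] = squareInterval([z0 - cz, z1 - cz]);
    const k = x * x + y * y;
    return [Math.sqrt(k + a) - radius, Math.sqrt(k + b) - radius];
  };
}

// Binary search for the first surface crossing between zNear and zFar.
function findSurface(sdfInterval, zNear, zFar, eps) {
  // One evaluation over the whole ray: if the lower bound of the distance
  // is positive, the ray never touches the object.
  if (sdfInterval([zNear, zFar])[0] > 0) return null;
  let lo = zNear;
  let hi = zFar;
  let steps = 0;
  while (hi - lo > eps) {
    const mid = 0.5 * (lo + hi);
    // Keep the near half if it can contain the surface, else the far half.
    if (sdfInterval([lo, mid])[0] <= 0) hi = mid;
    else lo = mid;
    steps++;
  }
  return { z: lo, steps };
}
```

For a sphere of radius 10 centred at z = 100, `findSurface(sphereAlongRay(0, 0, 100, 10), 0, 1000, 0.001)` lands within 0.001 of z = 90 after 20 halvings, and a ray offset to x = 50 is rejected by the single whole-ray evaluation.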

We'd want our arithmetic implementation to have float/vec3/mat3/bool types, and then just copy Matt Keeter's register allocation thing to turn it into straight-line GLSL code. Potentially could even use/modify Fidget to do it?

One thing that concerns me is that the SketchNode shader code basically turns your sketch into straight-line GLSL code that reuses the same variables over and over, and it's super slow? What is up there?

I read some notes on maybe https://dercuano.github.io/notes/sdf-notes.html or a related site, about how the final resolution at which you stop raymarching can simply be the distance between pixels at the appropriate depth. Since I am using orthographic projection the pixels are always a fixed distance apart, so that is even easier.
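For an orthographic camera that threshold is just one division. A tiny sketch, with made-up parameter names (I am assuming the view is described by its visible height in world units and the canvas height in pixels):

```javascript
// Hypothetical helper: world-space distance between adjacent pixels for an
// orthographic camera whose view spans `viewHeight` world units vertically.
function pixelSpacing(viewHeight, canvasHeightPx) {
  return viewHeight / canvasHeightPx;
}

// Example: a 200-unit-tall view on a 1000-pixel-tall canvas.
const exampleHitEpsilon = pixelSpacing(200, 1000);
```

Here `exampleHitEpsilon` comes out at 0.2 units, so marching any closer than that to the surface cannot change which pixel is lit.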

I have come across https://www.shadertoy.com/view/lssSWH which is a GLSL implementation of interval arithmetic.

So the plan now is to set up some scene in shadertoy with both my current ray marcher, and one of equivalent quality but binary searching with interval arithmetic, and see which is faster.

OK, amazing! I have an SDF raymarcher with interval arithmetic working in shadertoy.

So now the plan is to make the scene more complicated and see which one starts dropping the most frames? Or just make it run the scene 100 times per frame?

The basic version drops to 10 fps if I make it run 1000 extra rayMarch's per frame.

The interval arithmetic version drops to 10 fps if I make it run only 100 extra rayMarch's per frame.

So at first glance the interval arithmetic version is slower. What if I reduce the number of steps on each as far as I can without causing visual errors?

With binary search version having Z range of 2000 units (2 metres in CAD, quite low), and 35 steps, the picture looks identical to me, including the boundary.

And now runs 1000 extra rayMarch's at 14.5 fps.

Basic version needs about 100 steps to not show any visual errors, and runs 1000 extra rayMarch's at 15.7 fps. So still winning but not by a lot.

The basic version stops when distance is within 0.001 - how many steps of binary search is that? log base 2 of 2 million is ~21. So we ought to be able to manage with only 21 steps of binary search? But that gives weird artifacts, so what is going wrong?
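The step count arithmetic, as a quick JavaScript check (helper names are mine). It also works the other way round: given a step budget, how wide is the final interval?

```javascript
// Number of halvings needed to shrink `range` down to at most `eps`.
function bisectionSteps(range, eps) {
  return Math.ceil(Math.log2(range / eps));
}

// Width of the remaining interval after `steps` halvings of `range`.
function widthAfter(range, steps) {
  return range / 2 ** steps;
}
```

`bisectionSteps(2000, 0.001)` is 21 and `bisectionSteps(1000, 0.001)` is 20. Going the other way, `widthAfter(2000, 16)` is about 0.03 units, so a 16-step search over 2000 units pins the surface down to roughly 0.03.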

It would be possible to start out with the basic version and then binary search once the distance to the surface gets small. Maybe if we even just do only 1 step of the basic version to start us off? Evaluate the SDF at the camera position, jump forward by that far, and then binary search to find the surface? Looks like it saves... 1 evaluation. So it saves none because you do an extra 1 upfront. Need to check again after I have worked out what is causing the visual artifacts when the number of steps is too low.

Oh! It's because I was using the last d value to see if we hit the surface - but there's a 50% chance that that was a "failed" test that landed before the surface. If we enter the loop then we 100% know that the interval contains the surface, so we definitely have a hit.

Now only needs 15 steps to look indistinguishable from perfect. And I don't think the initial 0-interval evaluation is worthwhile. 16 steps, no initial evaluation, seems good. Now how many fps for 1000 extra runs?

37 fps! Nice, more than twice as good as the naive version. And it comes with a guaranteed minimum interval size after 16 steps, instead of "just hoping" to get close. And for geometry with more faces perpendicular to viewing axis, this will be even better. A single sphere is basically easy mode for the naive version.

It gets down to 10 fps if I make it 4000 extra rayMarch's, so basically it can do 4x as many rays per frame as the naive version, on this scene, and we expect it to get better for more complex scenes (do we?).

Pros:

Cons:

So maybe the plan is to do the compiler thing like in Fidget, but compiling to GLSL? But then you have to rewrite all your code to suit your compiler?

What operations do my SDFs use so far?

And then what would the API look like? I guess we construct an expression tree in JavaScript. Then serialise the tree into static single assignment (SSA) form, then "register allocate" and write straight-line GLSL code. The expression tree would work basically the same as the document tree.
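A first sketch of the tree-to-GLSL step in JavaScript, just to see the shape of it. Everything here (node constructors, field names, `toStraightLineGlsl`) is hypothetical, and it stops at SSA form: every temporary is assigned exactly once and there is no register allocation yet.

```javascript
// Hypothetical expression-tree constructors; every node carries its GLSL type.
function input(type, name) {
  return { kind: "input", type, name };
}
function lit(value) {
  return { kind: "lit", type: "float", value };
}
function call(type, fn, ...args) {
  return { kind: "call", type, fn, args };
}
function sub(a, b) {
  return { kind: "binop", type: a.type, sym: "-", args: [a, b] };
}

// GLSL float literals need a decimal point: 1 becomes "1.0".
function glslFloat(v) {
  return Number.isInteger(v) ? v.toFixed(1) : String(v);
}

// Walk the tree depth-first and emit one assignment per operation.
function toStraightLineGlsl(root) {
  const lines = [];
  const names = new Map(); // node -> name, so shared subtrees are emitted once
  const emit = (node) => {
    if (names.has(node)) return names.get(node);
    let name;
    if (node.kind === "input") {
      name = node.name;
    } else if (node.kind === "lit") {
      name = glslFloat(node.value);
    } else {
      const args = node.args.map(emit);
      const expr =
        node.kind === "call" ? `${node.fn}(${args.join(", ")})` : `${args[0]} ${node.sym} ${args[1]}`;
      name = `t${lines.length}`;
      lines.push(`${node.type} ${name} = ${expr};`);
    }
    names.set(node, name);
    return name;
  };
  lines.push(`return ${emit(root)};`);
  return lines.join("\n");
}

// Example: union (min) of two concentric spheres, sharing length(p).
function exampleScene() {
  const p = input("vec3", "p");
  const len = call("float", "length", p);
  return call("float", "min", sub(len, lit(1)), sub(len, lit(2.5)));
}
```

`toStraightLineGlsl(exampleScene())` produces four assignments (`t0` to `t3`) and a `return t3;`, with `length(p)` computed once even though both spheres use it. The same tree could also be evaluated directly in JavaScript, which is the "no second implementation" benefit.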

And is there a good way to do "tape shortening" in GLSL? That's the idea where if we notice that one of the arguments to min() has its min and max smaller than the other argument, then the other argument doesn't need to be evaluated next time if we split the interval in half. (But they might still need to be if we pick the "other" half of the parent interval).
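The test itself is simple; here it is as a JavaScript sketch with two spheres on the ray axis (names are made up). How to actually exploit the result inside a GLSL loop is still the open question.

```javascript
// Decide which arguments of min(a, b) are still live, given their interval
// results [lo, hi] over the current ray segment.
function liveArgsOfMin(a, b) {
  if (a[1] < b[0]) return "a"; // a is always smaller: b is dead on every sub-interval
  if (b[1] < a[0]) return "b"; // b is always smaller: a is dead on every sub-interval
  return "both";
}

// Interval SDF of a sphere centred on the ray axis at z = centre.
function sphereOnAxis(centre, radius) {
  return ([z0, z1]) => {
    const lo = z0 - centre;
    const hi = z1 - centre;
    // Interval version of abs().
    const absLo = lo >= 0 ? lo : hi <= 0 ? -hi : 0;
    const absHi = Math.max(Math.abs(lo), Math.abs(hi));
    return [absLo - radius, absHi - radius];
  };
}

// Which spheres matter on a given segment of the ray?
function liveOnSegment(segment) {
  const nearSphere = sphereOnAxis(100, 10);
  const farSphere = sphereOnAxis(900, 10);
  return liveArgsOfMin(nearSphere(segment), farSphere(segment));
}
```

`liveOnSegment([0, 1000])` is `"both"`, `liveOnSegment([0, 400])` is `"a"` and `liveOnSegment([600, 1000])` is `"b"`, which shows the caveat: a branch that is dead in one half of the parent interval can be the only live one in the other half, so the shortened tape is only valid for the sub-intervals of the segment it was computed on.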