Trying to learn about the mind by analysing the brain is like trying to learn about software by analysing the computer. The only reason anybody thinks neuroscience is related to minds is that we're not familiar with minds that aren't made out of brains. But brains are just the mechanism that evolution landed on; they're not fundamental. Our planes don't fly the same way birds do, and attempts to discover the principles of flight by analysing birds were not successful. Flight is actually much simpler than birds! Maybe the principles of consciousness are much simpler than brains.

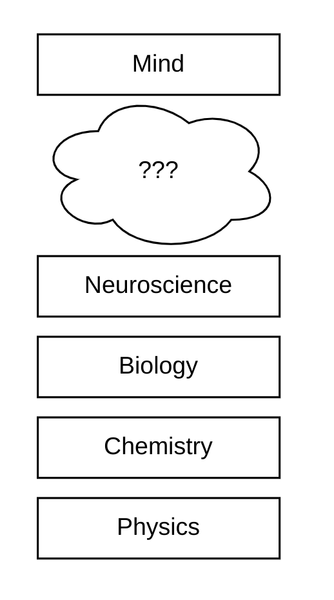

Here's a rough sketch of the stack that implements human minds:

We are approaching the problem from two different directions. We can study the physical properties of the brain (working upwards), and we can introspect on thoughts (working downwards). You'd think this would allow us to meet in the middle and understand the system all the way to the bottom.

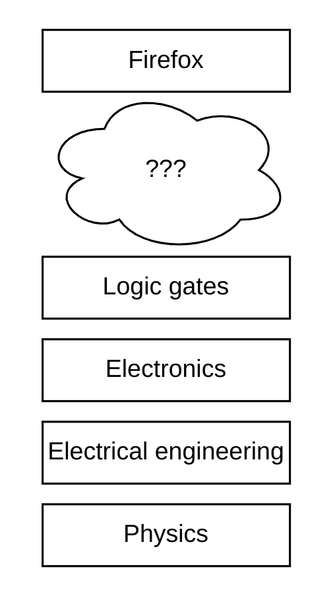

Sadly not. The cloudy "???" section may hide unimaginable complexity that is neither physically part of the brain nor accessible to introspection. Just imagine trying to understand how a web browser works when all you understand is electronic logic gates, plus whatever you can learn by pointing and clicking in the GUI.

You would have no idea about the enormous tower of software sitting underneath Firefox, and no amount of studying logic gates will explain it. Firefox seems to behave like a completely different type of thing from logic gates. And it is a completely different type of thing. You need to understand a whole stack of abstractions before you can comprehend Firefox in terms of logic gates.

I think trying to understand the mind in terms of neuroscience similarly disregards the tower of complexity that lies in the cloudy "???" zone.

Furthermore, the transistors and the logic gates are not necessary for Firefox to function. They're just a substrate on which we can perform computation. Any substrate capable of general computation would do, whether relays, vacuum tubes, or pencil and paper given enough time! Similarly, I think brains and neurons are not necessary for minds to function: they're just a substrate that interfaces between biology and whatever magical yet-to-be-discovered abstractions lie in the "???" layer.
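As a toy illustration of "any substrate would do", here is a minimal Python sketch. All the names in it (`nand`, `nor`, `Logic`, `logic_from_nand`, `logic_from_nor`, `add`) are hypothetical, made up for this example. It stacks a few layers of abstraction (a primitive gate, then NOT/AND/OR, then XOR and a full adder, then an 8-bit ripple-carry adder) on two different "substrates": one whose only primitive is NAND and one whose only primitive is NOR. Both gates are functionally complete, so the same upper layers can be rebuilt on either, and the adder at the top computes exactly the same function.

```python
from dataclasses import dataclass
from typing import Callable

Bit = int
Gate = Callable[[Bit, Bit], Bit]


# Layer 0: each hypothetical "substrate" offers exactly one primitive gate.
def nand(a: Bit, b: Bit) -> Bit:
    return 1 - (a & b)


def nor(a: Bit, b: Bit) -> Bit:
    return 1 - (a | b)


@dataclass
class Logic:
    """Layer 1: NOT, AND and OR, however the substrate happens to provide them."""
    not_: Callable[[Bit], Bit]
    and_: Gate
    or_: Gate


def logic_from_nand(g: Gate) -> Logic:
    def not_(a: Bit) -> Bit:
        return g(a, a)
    return Logic(
        not_=not_,
        and_=lambda a, b: not_(g(a, b)),        # AND = NOT(NAND)
        or_=lambda a, b: g(not_(a), not_(b)),   # De Morgan
    )


def logic_from_nor(g: Gate) -> Logic:
    def not_(a: Bit) -> Bit:
        return g(a, a)
    return Logic(
        not_=not_,
        and_=lambda a, b: g(not_(a), not_(b)),  # De Morgan
        or_=lambda a, b: not_(g(a, b)),         # OR = NOT(NOR)
    )


def add(logic: Logic, x: int, y: int, width: int = 8) -> int:
    """Layers 2-3: XOR and a full adder, chained into a ripple-carry adder.

    Only the Logic layer is used here; this code never sees the primitive gate.
    Carries beyond `width` bits are discarded, so the result is (x + y) mod 2**width
    for non-negative x and y.
    """
    def xor(a: Bit, b: Bit) -> Bit:
        return logic.and_(logic.or_(a, b), logic.not_(logic.and_(a, b)))

    total, carry = 0, 0
    for i in range(width):
        a, b = (x >> i) & 1, (y >> i) & 1
        half = xor(a, b)
        total |= xor(half, carry) << i
        carry = logic.or_(logic.and_(a, b), logic.and_(carry, half))
    return total


nand_machine = logic_from_nand(nand)
nor_machine = logic_from_nor(nor)

for x, y in [(3, 4), (100, 27), (200, 100)]:
    print(add(nand_machine, x, y), add(nor_machine, x, y), (x + y) % 256)
# 7 7 7
# 127 127 127
# 44 44 44
```

Nothing at the top of the stack reveals which gate sits at the bottom. It is only an analogy, of course: it shows substrate independence for an adder, not for a mind.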

And you may think that understanding those abstractions must be very complicated. Well, the way birds work is very complicated, but the principles of flight are not, and flight only seems complicated if you approach it by studying birds.

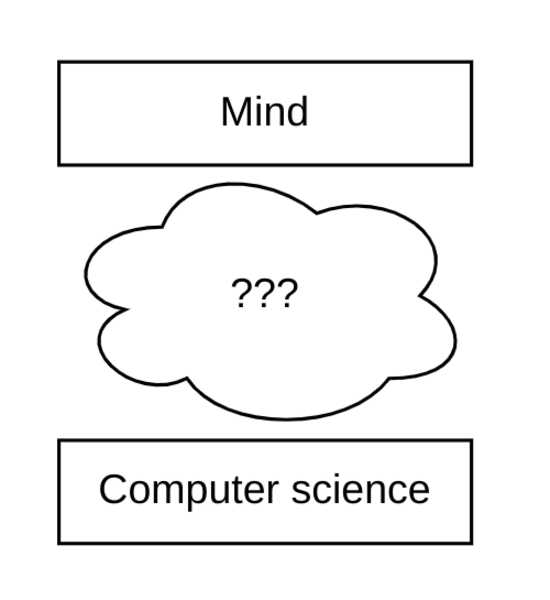

So if not neuroscience, then what will help us with the hard problem of consciousness? I think the problem lies at the intersection of computer science and philosophy. The "mind" part is very obviously the domain of philosophy. And I think that if you work your way upwards from neuroscience, you eventually derive a biological implementation of computer science, and then you're stuck again.

Why do I think that? I claim that a brain is purely an information-processing machine. (If you disagree, your counter-argument should probably start by outlining some proposed input or output of the brain that could not be encoded numerically.)

The Church-Turing thesis says that any function that can be computed by a mechanical procedure can be computed by a Turing machine, and therefore by any general-purpose computer with enough memory and time. Since the brain is (I claim) just a physical mechanism computing a function, all of the operations of a brain can be performed by general-purpose computers. And since (I claim) the mind is implemented on the brain, it must therefore be possible to implement a mind in software. I used to doubt this, but I am a believer now.

So let's skip all the hard work of explaining how neuroscience gives rise to computer science (a worthy and interesting field of study, no doubt, but not the one I'm interested in right now). Let's assume that working upwards from neuroscience does eventually reach computer science. Since we already have a way to implement computer science, that reduces the problem to this:

Much more tractable!

Now assume we have developed a candidate "software mind" that might have its own conscious internal experience. How would we find out? How would we convince others? We can't just hook it up to a text chat and ask it, because it might say it's conscious even if it is not. We need to be able to show that it is truly conscious.

So before we start building minds in software, we ought to agree on a way to decide whether they are conscious. Traditionally this is very difficult, because consciousness can only be observed from the inside. Since you can only access your own internal experience, the only person whose consciousness you can be sure of is yourself.

Currently our best heuristic for determining whether something else is conscious is to check how much it behaves like a human. Humans: as conscious as it gets. Apes: very conscious. Dogs: quite conscious. Plants: vegetative. Rocks: nothing going on, nobody home, not at all conscious.

This works surprisingly well in ordinary daily life, but it is not much use for identifying a mind that isn't like a human. It would be like checking whether a plane can fly by measuring how much it looks like a bird.

If we are creating minds in software, however, we have an advantage: total access to all of the system's internal state while it's running. So what would we look for in a hypothetical software mind to determine whether or not it's conscious?

I don't know. But maybe answering that would give us some pointers on how to construct one.