Today we're soft-launching AI Test User. It's a robot that uses Firefox like a human to test your website and find bugs all by itself. If you have a site you'd like to test, submit it here, no signup required.

It's a project that Martin Falkus and I are working on, currently using Claude Computer Use. The aim at the moment is just to do test runs on as many people's websites as we can manage, so if you have a website and you're curious what the robot thinks of it, fill in the form to tell us where your website is, how to contact you, and anything in particular you want it to look at.

AI Test User aims to provide value by finding bugs in your website before your customers do, so that you can fix them sooner and keep your product quality higher.

The way it works is we have a Docker container running Firefox, based on the Anthropic Computer Use Demo reference implementation. At startup we automatically navigate Firefox to the customer's website, and then give the bot a short prompt containing any login credentials and specific instructions supplied by the customer, and asking it to test the website and report bugs. We record a video of the screen inside the Docker container so that the humans can see what the machine saw.
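
To give a rough idea of what that prompt assembly could look like, here is a minimal sketch. It is not our actual code: `TestRequest`, its field names, and the prompt wording are all made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TestRequest:
    """What the customer submitted. Field names are hypothetical."""
    url: str
    username: Optional[str] = None
    password: Optional[str] = None
    instructions: Optional[str] = None


def build_prompt(req: TestRequest) -> str:
    """Assemble the short starting prompt for one test run."""
    parts = [
        f"Firefox is already open at {req.url}.",
        "Test this website as a human user would and report every bug you find.",
    ]
    # Credentials are optional: only mention them if the customer gave both.
    if req.username and req.password:
        parts.append(
            f"Log in with username {req.username!r} and password {req.password!r}."
        )
    # Free-text instructions are optional too.
    if req.instructions:
        parts.append(f"Specific instructions from the site owner: {req.instructions}")
    return "\n".join(parts)
```

Calling `build_prompt(TestRequest(url="https://example.com"))` produces just the two base lines, and the optional lines only appear when the customer filled those fields in.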

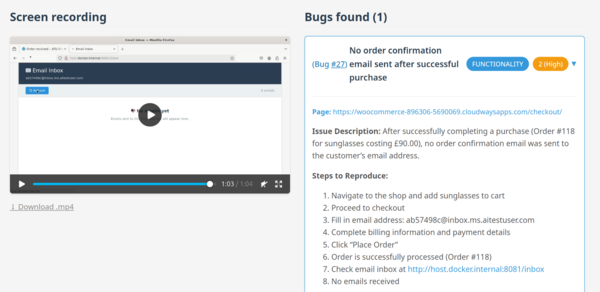

It has a tool that it can use to report a bug. Attached to each bug report is a screenshot of Firefox at the time the bug was detected, and a reference to the timestamp in the screen recording, so when you load up the bug report you can see exactly what the bot saw, as well as the bot's description of the bug. We ask it to report bugs for everything from spelling errors and UI/UX inconveniences, all the way up to complete crashes.
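
For the curious, a bug-reporting tool along these lines can be sketched as below. The dictionary follows the general shape of an Anthropic tool definition (`name`, `description`, `input_schema`), but the tool name, the fields, the severity levels, and the `handle_report_bug` helper are assumptions for illustration rather than what we actually run.

```python
from dataclasses import dataclass

# Hypothetical tool definition offered to the model alongside the computer-use tools.
REPORT_BUG_TOOL = {
    "name": "report_bug",
    "description": (
        "Report a bug found on the website under test, from spelling errors "
        "and UI/UX inconveniences up to complete crashes."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "description": {"type": "string"},
            "severity": {
                "type": "string",
                "enum": ["cosmetic", "minor", "major", "crash"],
            },
        },
        "required": ["title", "description"],
    },
}


@dataclass
class BugReport:
    title: str
    description: str
    severity: str
    screenshot_png: bytes   # screenshot of Firefox when the bug was reported
    video_offset_s: float   # where to seek to in the screen recording


def handle_report_bug(tool_input: dict, screenshot_png: bytes,
                      now: float, recording_started_at: float) -> BugReport:
    """Turn the model's tool call into a stored report with its evidence attached."""
    return BugReport(
        title=tool_input["title"],
        description=tool_input["description"],
        severity=tool_input.get("severity", "minor"),  # assumed default
        screenshot_png=screenshot_png,
        video_offset_s=max(0.0, now - recording_started_at),
    )
```

The point of the design is that the bot only supplies the words; the screenshot and the video offset are attached by the harness, so the evidence can't be hallucinated.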

I have put up a page at https://incoherency.co.uk/examplebug/ that purports to be a login form, except it is deliberately broken in lots of fun and confusing ways. Cursor one-shotted this for me, which was great. Haters will say Cursor is only good at writing weird bugs at scale. I say it's not only good at writing weird bugs at scale, but you have to admit it is good at writing weird bugs at scale. You can see an example report for the examplebug site, in which the AI Test User made a valiant but futile effort with my Kafkaesque login form that keeps deleting the text it enters.

For a more realistic example run, you could see this report for an example shop. It goes through a WooCommerce shop, finds the product we asked it to buy, checks it out using the Stripe dummy card number, and then checks that it received the confirmation email. It reported a bug on that one because it didn't get the confirmation email.

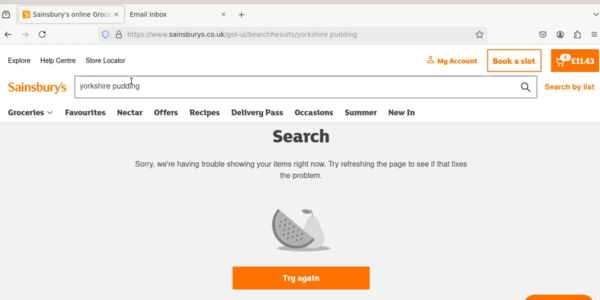

Or you could see it ordering roast dinner ingredients from Sainsbury's, stopping just short of booking a delivery slot. Apparently Sainsbury's don't have Yorkshire puddings?? I'm not sure what went wrong there, but AI Test User dutifully submitted a bug report. Good bot.

I've been reading Eric Ries's "The Lean Startup" recently, and I have worked out that despite best efforts, we have actually already done too much programming work. The goal should be to get a minimum viable product in the hands of customers as soon as possible, so we should not have even implemented automatic bug reporting yet (let alone automatic bug deduplication, which seemed so important while I was programming it, but could easily turn out to be worthless).

We also give the machine access to a per-session "email inbox". This is a page hosted on host.docker.internal that just lists all of the emails received on its per-session email address. This basically only exists to handle flows based around email authentication. It doesn't have the ability to send any emails, just see what it received and click links. (Again, we maybe should have skipped this until after seeing whether anyone wants to use it.)
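
A read-only inbox like this doesn't need much. Here is a minimal sketch of the idea, assuming mail has already been received and parsed somewhere else; the `SessionInboxes` class and the HTML it produces are invented for illustration, and the URL matching is deliberately naive.

```python
import re
from collections import defaultdict
from html import escape


class SessionInboxes:
    """In-memory store of received emails, keyed by per-session address."""

    def __init__(self):
        self._by_address = defaultdict(list)

    def deliver(self, to_address: str, subject: str, body: str) -> None:
        # Addresses are compared case-insensitively.
        self._by_address[to_address.lower()].append((subject, body))

    def render(self, address: str) -> str:
        """Return an HTML fragment listing every email for one session."""
        items = self._by_address.get(address.lower(), [])
        if not items:
            return "<p>No emails yet.</p>"
        rows = []
        for subject, body in items:
            safe_body = escape(body)
            # Make URLs clickable so the bot can follow verification links.
            safe_body = re.sub(
                r"(https?://[^\s<>\"']+)", r'<a href="\1">\1</a>', safe_body
            )
            rows.append(f"<li><b>{escape(subject)}</b><pre>{safe_body}</pre></li>")
        return "<ul>" + "".join(rows) + "</ul>"
```

There is no send method at all, which is the whole point: the bot can read and click, nothing more.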

One issue we've run into is the classic "LLM sycophancy" syndrome. Upon meeting with a big red error message, the bot would sometimes say "Great! The application has robust validation procedures", instead of reporting a bug! We don't have a great fix for that yet, other than saying in the initial prompt that we really, really want it to report bugs pretty please. It seems we are all prompt engineers on this blessed day.

We don't really know yet what to do about pricing. There is some placeholder pricing on the brochure site but that could easily have to change. One of the issues with this technology at the moment is that it's very slow and very expensive, which people might not like. Being very slow isn't necessarily a problem: we have some tricks to automatically trim down the screen recording so that the user doesn't have to sit through minutes of uneventful robot thinking time. But being expensive definitely is a problem. We are betting that the cost will come down over time, but until that happens either it will have to provide value commensurate with the cost, or else it isn't economically viable yet. Time will tell.
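
One plausible way to do that kind of trimming (not necessarily how we do it) is to keep only a small window of video around each moment the bot actually did something, and merge windows that overlap. The function name and the padding values below are assumptions for the sake of the example.

```python
def segments_to_keep(event_times, duration, pad_before=1.0, pad_after=2.0):
    """Return merged (start, end) windows, in seconds, around each event.

    event_times: offsets into the recording where the bot acted
    duration:    total length of the recording
    """
    segments = []
    for t in sorted(event_times):
        start = max(0.0, t - pad_before)
        end = min(duration, t + pad_after)
        if segments and start <= segments[-1][1]:
            # Overlaps (or touches) the previous window: extend it.
            prev_start, prev_end = segments[-1]
            segments[-1] = (prev_start, max(prev_end, end))
        else:
            segments.append((start, end))
    return segments
```

For example, `segments_to_keep([5, 6, 60], 100)` gives `[(4.0, 8.0), (59.0, 62.0)]`: the two nearby actions collapse into one window, and the 51 seconds of thinking in between are dropped. The resulting windows could then be handed to a video tool to cut and concatenate.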

We have found that the Anthropic API is not as reliable as we'd like. It's not uncommon to get "Overloaded" responses from the API for 10 minutes straight while status.anthropic.com is all green and reporting no issues at all. We've also tried out an Azure-hosted version of OpenAI's computer-use service, and found it very flaky too. For now the Anthropic one looks better, but it may be that we would want to dynamically switch based on which is more reliable at any given time.
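
We haven't built the switching yet, but the simplest version would be retry-with-backoff followed by falling through to the next provider. The sketch below is provider-agnostic on purpose: `OverloadedError` is a made-up stand-in for whatever the real SDK raises, and each provider is just a callable.

```python
import time
from typing import Callable, Sequence


class OverloadedError(Exception):
    """Hypothetical stand-in for a provider's 'Overloaded' response."""


def call_with_fallback(
    providers: Sequence[Callable[[str], str]],
    prompt: str,
    retries: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try each provider in order, retrying overloads with exponential backoff."""
    last_error = None
    for provider in providers:
        for attempt in range(retries):
            try:
                return provider(prompt)
            except OverloadedError as err:
                last_error = err
                # Wait 1x, 2x, 4x... the base delay before the next attempt.
                sleep(base_delay * 2 ** attempt)
    raise RuntimeError("all providers overloaded") from last_error
```

A smarter version would track recent failure rates and pick the healthier provider up front rather than always starting with the first one, but this captures the basic shape.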

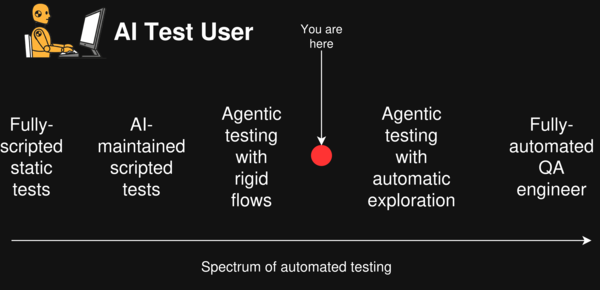

There is a spectrum of automated website testing, from fully-scripted static Playwright tests on one end, through AI-maintained Playwright tests, agentic tests with rigid flows, agentic tests that explore the site on their own and work out what to test, up to a fully-automated QA engineer which would decide on its own when and what to test, and work with developers to get the bugs fixed. AI Test User is (currently!) positioned somewhere between "agentic tests with rigid flows" and "agentic tests that explore on their own".

There are a few approximate competitors in the space of AI-powered website testing. QAWolf, Heal, and Reflect seem to be using AI to generate more traditional scripted tests. Autosana looks to be more like the agentic testing that we are doing, except for mobile apps instead of websites. I'm not aware of anyone doing exactly what we're doing but I would be surprised if we're the only ones. It very much feels like agentic testing's time has come, and now it's a race to see who can make a viable product out of it.

And there are further generalisations of the technology. There is a lot you can do with a robot that can use a computer like a human does. Joe Hewett is working on a product at Asteroid (YC-funded) where they are using a computer-using agent to automate browser workflows especially for regulated industries like insurance and healthcare.

Interested in AI Test User? Submit your site and we'll test it today. We're also interested in general comments and feedback: you can email hello@aitestuser.com and we'll be glad to hear from you :).