Last modified: 2025-03-29 10:09:45

< 2025-03-27 2025-03-29 >There are kind of 3 classes of thing I still need to do:

Core problems include:

Straightforward things:

Transform a property of each node instead of a separate propertyTransform part of each node, support extruding sketches perpendicular to their plane instead of always along ZUser interface:

I think focus on the "core problems" for now.

Bounding volumes give us:

So bounding volumes would be really helpful.

I previously thought that spheres would make good bounding volumes, but the problem is they don't work very well on shapes that are long in only one or 2 axes, because they get very large in all 3 axes.

I had thought that axis-aligned bounding boxes were more complicated to implement, but ChatGPT has given me:

Primitives:

- Sphere(r): [-r, r]^3

- Box(x, y, z): [-x/2, x/2] × [-y/2, y/2] × [-z/2, z/2]

- Cylinder(h, r): [-r, r] × [-r, r] × [-h/2, h/2]

Boolean operations:

- Union(A, B): AABB = min(A.min, B.min), max(A.max, B.max)

- Intersection(A, B): AABB = max(A.min, B.min), min(A.max, B.max) (only if AABBs overlap—otherwise it's empty)

- Difference(A, B): Same as AABB(A), unless you're trimming off a lot with B

Transformations:

- Translation: Add vector to both min and max

- Scale: Multiply each component by the scale factor (be careful with negative scales—flip min/max if needed)

- Rotation: Apply rotation matrix to all 8 corners of the bounding box, take the AABB of those points

Which doesn't look too bad. Maybe I just do that?

OK, I did get most of the way through this, before I realised that the bounding box can change based on your properties. So maybe the bounding box should be computed with Peptide? Maybe not necessary, I already recompute the full SDF Peptide whenever the document changes, recomputing the bounding box isn't a big deal.

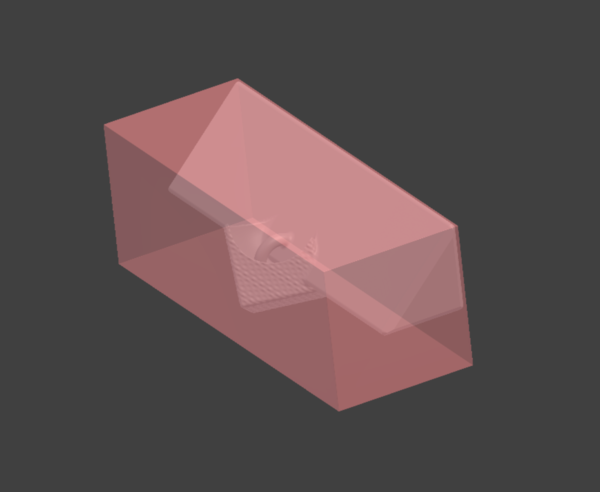

Looking good:

One issue is that it has to recompile the box shader every time, even

though it hasn't actually changed, because it makes a new BoxNode etc.

which means the uniform names have changed.

I could have it set the size and centre position as uniforms and then never have to recompile the bounding box shader. Ignore for now, that's a "straightforward thing" rather than a "core problem".

My work keyboard was typing random hyphens throughout the day yesterday. So the plan is to plug it in and see if the problem comes back.

So far it seems fine. I'm going to put some isopropyl alcohol in the switch and leave it at that.

The next core technology problem is: how do we import STL files?

Maybe we'd want to store a sparse octree of lower bounds on distance to the surface. So imagine start by building just a voxel grid of distances to the surface. And then for 2x2x2 leaf regions that are all inside or all outside, we pick the value closest to 0 and coalesce the 8 children into 1 larger voxel. And repeat until done.

dfs(node, pos, level):

if node.leaf:

node.dist = meshSdf(pos)

return

for i = 0 to 7:

dfs(node.child[i], pos + childPos(i, level), level+1)

for i = 1 to 7:

if sign(child[i].dist) != sign(child[0].dist):

return

node.dist = sign(child[0].dist) * min(map { abs(child[i].dist) } (0..7));

node.leaf = true

for i = 0 to 8:

delete node.child[i]

Where childPos(i, level) tells you the offset to the ith child at

the given level of the tree.

So this actually gives us an octree of an SDF. Is not really specific to importing meshes, it's more of a general method to optimise an SDF (on the basis that iterating a few levels of octree nodes to rule out a hit is much more efficient than evaluating a complex SDF).

But how does meshSdf work? And how do we then make an

efficient shader to use the octree?

Shader is:

But how do you pass the octree to the shader? With a 3d texture?

And, in fact, checking the signs of the child values is not really right. What we want to do instead is check if the child value is too far away from what linear interpolation would tell us. If not, we can safely delete the node regardless of whether it crosses the surface. And otherwise, we can't safely delete it even if it doesn't cross the surface, if we want the distance field to work. (But we could relax that, if we literally only care about the surface, and say that we'll coalesce nodes if they all have the same sign or if they are all approximately predicted by linear interpolation).

And I guess meshSdf() can iterate over every triangle and work out

the distance from the point to the closest point of the triangle. And

tell if it's inside or outside based on... normals?

So maybe we actually do this as 2 things: firstly, a node that can turn

an STL into a meshSdf() that is an exhaustive union of all its

triangles. And then we have a "Simplify" node or something that does

the voxelisation, which can be added by the user anywhere in the

tree. And we implicitly Simplify mesh imports.

So the naive meshSdf() would be:

meshSdf(p):

d = 0

for each triangle t:

dt = pointTriangleDist(p, t)

if (dt < d) d = dt

return d

But in Peptide. And pointTriangleDist() is...? Don't know, AI can

figure that bit out.

OK, this is working a bit!

Showing a tetrahedron.

It has a hack where it needs to give the triangles "thickness" in order to work, which suggests the inside/outside testing isn't working.

Intersecting it with a box, it is very obvious that all of space is getting classified as "outside", which means the inside/outside test is definitely not working.

There are 2 methods here:

I prefer the 2nd option because it doesn't care about normal directions or winding order.

I can't get the code right though, and neither can Claude, so we're struggling.

https://www.shadertoy.com/view/ltf3W2

This shadertoy has a "bevel" smooth-min, could see if it works better than my current chamfer code?

Yeah it does, I'm using that version now.

https://erleuchtet.org/~cupe/permanent/enhanced_sphere_tracing.pdf

They point out that you can ray march with a step size larger than the value from the SDF, and as long as the sum of the previous distance and the new distance is more than your step size, you know you didn't step through the surface. If not, step back a bit and revert to normal ray marching.

They do point out that it performs worse than regular raymarching on GPU because of thread divergence caused by the branching? And my observation is that my renderer performs worse with this technique. But it does seem like it ought to help.

The last paragraph is:

For implementations on GPUs, further research is also required for reducing computational penalties incurred in image areas where the number of steps required for con- vergence is highly non-uniform, thus leading to diverging threads when execution is performed on GPUs. A good heuristic for estimating the number of iterations required for each pixel would allow for reordering the threads into groups of similar workload and lead to a better utilization of the massive parallel computation power available.

I'm testing this by changing the "omega" or "over-relaxation" factor, and then noting down the "scale" at which the renderer stabilises. Also I've hacked it to adjust the scale if the fps ratio is more than 1% away from 45 fps, instead of the default 10%. And just loading up the default scene and waiting for the "scale" to get stable, averaged across 3 runs.

So any value greater than 1.0 gives worse performance than 1.0. And if I delete the over-relaxation code entirely I get about scale 1.80. So yes, definitely it performs worse on GPU than naive sphere tracing.

< 2025-03-27 2025-03-29 >