Last modified: 2025-03-22 10:02:03

< 2025-03-20 2025-03-22 >I'm going to make a ShaderLayer class that has a compiled shader

and a bunch of uniform values. The "renderer" will take a series of

"ShaderLayers" to draw. The "app" will keep one ShaderLayer for the

primary object and one for the secondary object, if any, and pass them

both to the renderer.

And then the renderer is dealing with OpenGL stuff, but the rest of the application is just dealing with setting uniforms in the ShaderLayer.

Great, working!

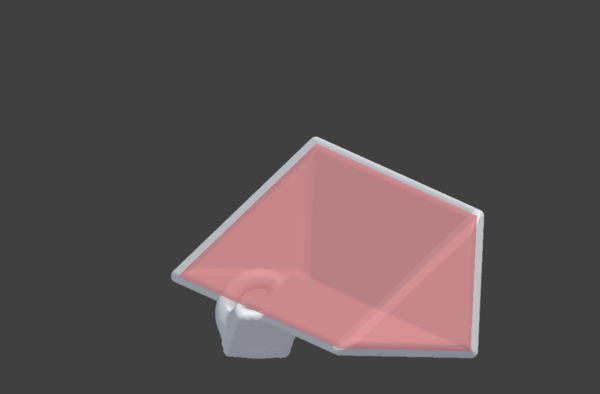

And the secondary object is now always shown with genuine reduced opacity, rather than being drawn solid but blended on top, so we do get to see the inside of the secondary object now:

If the first point it not at (0,0) you get this:

It still has a surface point at (0,0), and then randomly can't decide whether it is inside or outside for some region?

Claude debugged it for me, fixed now.

But the "3d distance" thing isn't working. Ah, it's because I was

overriting p at the start of the function to turn it 2d, and then

later on trying to access its Z coordinate.

I don't currently have a way to do the rotateToAxis() stuff with

Peptide.

So I need it to have a mat3 type, and I need to implement:

Expression simplification seems to be taking quadratic time. Seems wrong.

It's actually at the point now where simplifying the expression into a DAG is taking longer than shader compilation!

It actually seems to work quite well if I skip simplification.

Without simplification we get 48 fps at resolution scale 2.519 with 10 copies of the revolved sketch with blend radius 1 at spacing 20mm.

With simplification, and the same model but only 6 copies, we get 47 fps at resolution scale 2.832.

Cache size is:

So the cache size is not getting crazy large, what about "key" evaluations?

I don't see why adding 1 more copy quadruples the number of key

evaluations. I think it's because smin() basically references

its arguments 5 times.

I think this is not recognising when it has already simplified a

particular object. I made it set the objects in the Map as well

as the stringified versions. And then we get:

Much better.

I am not sure whether I want to be explicitly tracking bounding spheres because computing them turns into a lot of work.

But I do need to know bounding volumes in order to provide domain repetition-based patterns.

One option would be that we provide a checkbox to enable domain repetition, but we don't implement anything to check how many objects are overlapping. Maybe we leave it up to the user to say how many of the neighbours need to be unioned?

But we still need to know the object centre in order to work out which area of space to map on to.

Is tracking centres really any easier than tracking bounding spheres?

Maybe just suck it up and track bounding spheres?

Interval arithmetic in Peptide

I want to add support for interval arithmetic to Peptide.

So the idea is we'd be able to compile to GLSL code where the raymarching binary searches over an interval.

You initialise the "ray" to have z coordinate spanning from -1000 to +1000 and then evaluate the sdf over that interval and see if the resulting distance interval crosses 0.

If it does not then the ray doesn't hit the object and you stop.

If it does then you evaluate it on the interval from z=-1000 to z=0, if that hits the surface then you go halfway into that (-1000 to -500), otherwise you go halfway into the other half (0 to +500) etc. until you have done some fixed number of steps.

So this way you complete raymarching to some bounded precision in O(lg n) steps.

But then we get to how the representation would work:

The advantage of number 1 is that I think it is the smallest change? Unsure. The advantage of number 2 is we don't need any new types. The advantage of number 3 is that we get to only bother with intervals for sub-expressions that genuinely are intervals. The big downside of number 3 is that we have to rewrite all the SDFs.